In Review

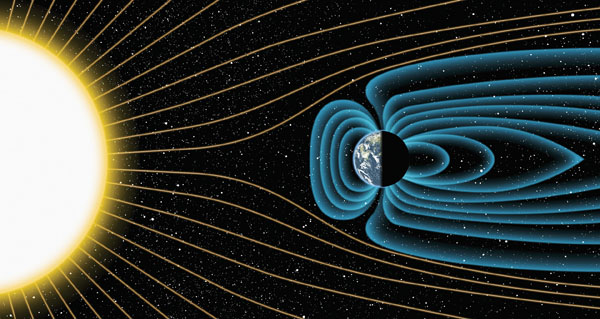

FIELD SHIELD: An artist’s depiction (not to scale) of Earth’s magnetic field deflecting high-energy protons from the sun four billion years ago (Photo: Michael Osadciw)

Since 2010, the best estimate of the age of Earth’s magnetic field has been 3.45 billion years. But a researcher responsible for that finding has new data showing the magnetic field is far older.

John Tarduno, professor in the Department of Earth and Environmental Sciences and a leading expert on Earth’s magnetic field, and his team say they now believe the field is at least 4 billion years old. The findings were published in the journal Science.

The magnetic field helps to prevent solar winds—streams of charged particles shooting from the sun—from stripping away the Earth’s atmosphere and water that make life on the planet possible.

A record of that field’s direction and intensity can be found in minerals from the earliest periods of Earth’s history. Tarduno’s new results are based on a record of magnetic field strength fixed within magnetite found in zircon crystals—formed more than a billion years ago—from the Jack Hills of Western Australia.

“We know the zircons have not been moved relative to each other from the time they were deposited,” he says. “As a result, if the magnetic information in the zircons had been erased and re-recorded, the magnetic directions would have all been identical.”

Instead, he found that the minerals revealed varying magnetic directions—convincing him that the intensity measurements recorded in the samples were as old as four billion years. The findings support previous zircon measurements that suggest an age of 4.4 billion years for the magnetic field.

In a separate study, a team led by Tarduno found that a region of the Earth’s core beneath southern Africa may play a special role in reversals of the planet’s magnetic poles. Thanks to their knowledge of ancient African practices—in this case, the ritualistic cleansing of villages by burning down huts and grain bins—the researchers were able to assemble data about the magnetic field in the Iron Age. When burning, the clay floors reached a temperature of more than 1,000 degrees Celsius, hot enough to erase the magnetic information stored in the magnetite and create a new record of the magnetic field strength and direction at the time of the burning.

Reversals of the North and South Poles have occurred irregularly throughout history, with the last one taking place about 800,000 years ago. Once a reversal starts, it can take as long as 15,000 years to complete. The new data suggests the core region beneath southern Africa may be the birthplace of some of the more recent and future pole reversals.

Results of the study were published in the journal Nature Communications.

—Peter Iglinski

Young Adults’ Social Lives Can Predict Midlife Well-Being

It’s well known that social connections promote psychological and overall health. Now research shows that the quantity of social interactions a person has at age 20—and the quality of social relationships at 30—can bring benefits later in life.

The 30-year longitudinal study, published in the journal Psychology and Aging, shows that frequent social interactions at age 20 provide psychological tools to be drawn on later: they help people figure out who they are, the researchers say.

Surprisingly, the study suggests that having a high number of social interactions at 30 doesn’t have psychosocial benefits later.

But 30-year-olds who reported having intimate and satisfying relationships also reported high levels of well-being at midlife. Meaningful social engagement was beneficial at any age, but more so at 30 than 20.

Cheryl Carmichael ’11 (PhD) conducted the research as a doctoral candidate. She’s now an assistant professor of psychology at Brooklyn College and the Graduate Center, City University of New York.

—Monique Patenaude

Nursing Home Care for Minorities Improves

While disparities continue to exist, the quality of care in nursing homes with higher concentrations of racial and ethnic minority residents has improved—and the progress appears to be linked to increases in Medicaid payments.

That’s according to a new study published in the journal Health Affairs and led by Yue Li, associate professor in the Department of Public Health Sciences.

An estimated 1.3 million older and disabled Americans receive care in some 15,000 nursing homes across the country. Over the past 20 years, the number of African-American, Hispanic, and Asian residents of nursing homes has increased rapidly, and these residents now account for nearly 20 percent of people living in U.S. nursing homes. But the institutions remain segregated, and homes with high concentrations of racial and ethnic minorities tend to have more limited financial resources, employ fewer nurses, and provide a lower level of care.

State Medicaid programs are the dominant source of funding for nursing homes, providing roughly half of total payments for long-term care. In recent years, states have tried to influence the quality of care by increasing reimbursement rates and linking those payments to improvements.

In a study using data from more than 14,000 nursing homes from 2006 to 2011, the researchers found that reported deficiencies in clinical and personal care and safety declined in nursing homes with both low and high concentrations of minorities. They also compared these trends to state Medicaid reimbursement rates and found that an increase of $10 per resident per day was associated with a reduction in the number of reported clinical-care deficiencies.

—Mark Michaud

Stress in Low-Income Families Can Affect Children’s Learning

Children living in low-income households who endure family instability and emotionally distant caregivers are at risk of having impaired cognitive abilities, according to new research from the Mt. Hope Family Center.

The study of more than 200 low-income mother-and-child pairs tracked levels of the stress hormone cortisol in children at ages two, three, and four. It found that specific forms of family adversity are linked to both elevated and low cortisol levels in children—and kids with such levels, high or low, also had lower-than-average cognitive ability at age four.

Family instability includes frequent changes in care providers, household members, or residence.

Such instability, the researchers say, reflects a general breakdown of the family’s ability to provide a predictable environment for children.

“We saw really significant disparities in children’s cognitive abilities at age four—right before they enter kindergarten,” says lead author Jennifer Suor, a doctoral candidate in clinical psychology. “Some of these kids are already behind before they start kindergarten, and there is research that shows that they’re unlikely to catch up.” She adds that the findings support the need for investment in community-based interventions that can strengthen parent-child relationships and reduce family stress early in a child’s life.

The study was published in the journal Child Development.

—Monique Patenaude

GREAT EXPECTATIONS: Babies’ brains respond not just to what they see, but also to what they expect to see, a kind of neural processing formerly thought only to happen in adults. (Photo: iStockphoto)

Babies’ Expectations May Help Brain Development

Infants can use their expectations about the world to rapidly shape their developing brains. That’s the finding of researchers at Rochester and the University of South Carolina, who performed a series of experiments with infants five to seven months old. Their study showed that portions of babies’ brains responsible for visual processing respond not just to the presence of visual stimuli, but also to the mere expectation of such stimuli.

That type of complex neural processing was once thought to happen only in adults, not in infants, whose brains are still developing important neural connections. The study was published in the Proceedings of the National Academy of Sciences and was led by Lauren Emberson while she was a postdoctoral fellow at Rochester’s Baby Lab. She is now an assistant professor in psychology at Princeton. Richard Aslin, the William R. Kenan Jr. Professor in the Department of Brain and Cognitive Sciences and codirector of the lab, also authored the study.

The researchers exposed one group of infants to a sequential pattern that included a sound—like a rattle or a honk—followed by an image of a red cartoon smiley face. Another group saw and heard the same things but without any pattern. After a little more than a minute, researchers began omitting the image 20 percent of the time.

By using light to measure oxygenation in regions of the babies’ brains, the scientists were able to see activity in the visual areas of the brains of infants exposed to the pattern—even when the image didn’t appear as expected.

“There’s a lot of work that shows babies do use their experiences to develop,” Emberson says. “That’s sort of intuitive, especially if you’re a parent, but we have no idea how the brain is actually using the experiences.”

—Monique Patenaude