Vision and art have always played a large role in Michele Rucci’s life.

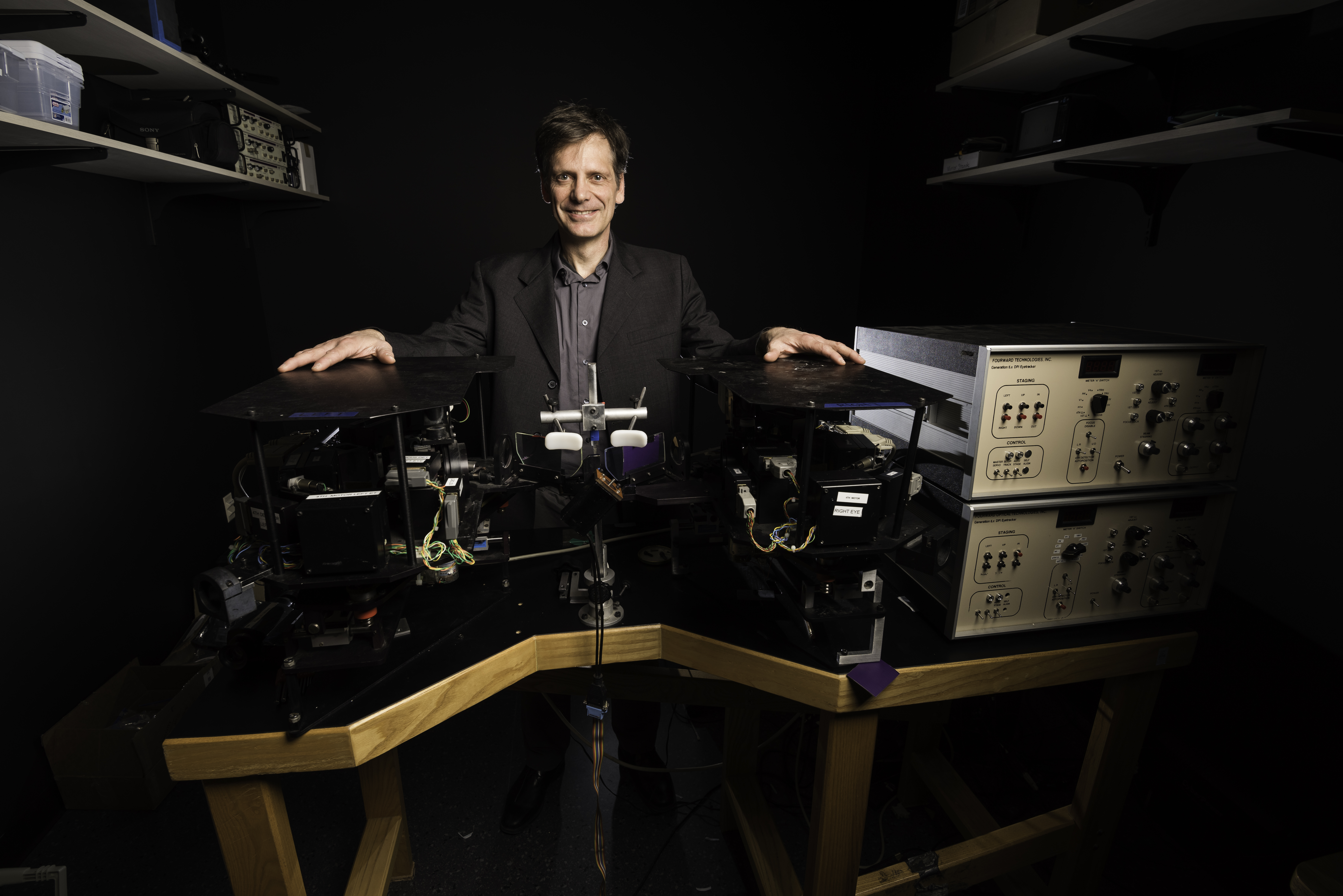

“Visual arts is a big component of where I come from,” says Rucci, a native of Florence, Italy, and a new professor of brain and cognitive sciences at the University of Rochester. “I’ve always been interested in how light gives rise to subjective experiences and how humans interpret it.”

At the University of Florence and later the Scuola Superiore S. Anna in Pisa, Rucci studied biomedical engineering and the ways machines extract information to build an image. He continued this work as a professor at Boston University, focusing on visual perception in humans.

Using a combination of head- and eye-tracking tools, virtual reality, and robots, Rucci’s current research brings together aspects of neuroscience, engineering, and computer science to study how we see.

What are some of the questions your lab is trying to answer?

We are interested in how vision works in humans. How do photons of light impinging on the retina get transformed into a world that is meaningful to us? How do we recognize faces? How do we reach for objects? The way we approach these questions is by studying vision together with the motor activities and behaviors we as humans perform.

What kinds of motor activities and behaviors?

Primarily movements of the eyes, neck, and head. The way you move profoundly shapes the input to your retina. Much of our work has focused on small eye movements that humans are not even aware of performing.

What are these small eye movements?

Normally our eyes fixate on a point for only a fraction of a second, then jump to another point and so on. Even within that short interval, when we think our gaze is fixated on one point, in reality it moves constantly because of different types of small eye movements. Together with larger, voluntary gaze shifts, these small eye movements allow us to extract information piece by piece and then build a coherent percept of, for example, a person’s face.

Why is it important to study small eye movements if we aren’t even aware we are making them?

There are many reasons. They are always there, even when we are attempting to maintain a steady gaze on a point. Most importantly for us, these small eye movements are the last, quintessential token of behavior that we need in order to see. If we eliminate them in the laboratory, our percepts fade away. There is no vision when there is no behavior. Many researchers thought small eye movements were random, but we found they are not. Humans are not aware of making these movements, but their oculomotor systems [part of the central nervous system] control the movements with an amazing degree of precision.

What are some of the methods and tools you use to study small eye movements?

We have different types of devices that measure eye movements, each with complementary advantages and limitations. Some of these eye trackers require the observer's head to be carefully immobilized; others do not. One of them, for instance, is head-mounted: it has a helmet and a virtual reality display, so we can manipulate the visual input signals experienced by the observer while he or she explores a scene naturally.

We also have a robot named “Mr. T” designed to replicate the retinal input experienced by humans. Its neck and cameras move in ways that mimic human head and eye movements. We use this robot to expose our computer models of neural pathways to realistic visual input signals.

So you build the robot to study human vision, and you also study human vision to build a robot?

Exactly. A goal of the lab is to apply what we learn from human vision to robotic vision. One of the projects with Mr. T involved learning how to reach for objects, and in order to do that, the robot needs to learn to judge distances. We replicated human head-eye coordination to gain information about distance and used it to calibrate artificial neural networks. Another project used similar head/eye strategies to segment an image into meaningful parts. We use robots to learn about the human visual system, as well as to improve the robots themselves.

Can your research help determine what goes wrong in visual disorders?

We are opening the door to recognizing the importance of behavior in visual perception. One of the emerging hypotheses is that abnormal motor control, especially at the scale of small eye movements, might contribute to visual impairments currently believed to be the consequences of abnormal visual processing. This is something we are starting to study right now with high-acuity visual impairments.

What are some of your other future research objectives?

Here in Rochester there is a long history of vision research and vision applications. It would be nice to collaborate with scientists at the University who study the optics of the eye and the retina and the physiology of the visual system. An important goal in the future is to see what happens to small eye movements in people who have different types of visual impairments. This research may provide critical information in the future design of visual prosthetics.