A natural language model developed in the Robotics and Artificial Intelligence Laboratory allows a user to speak a simple command, which the robot can translate into an action. If the robot is given a command to pick up a particular object, it can distinguish that object from others nearby, even if they are identical in appearance.

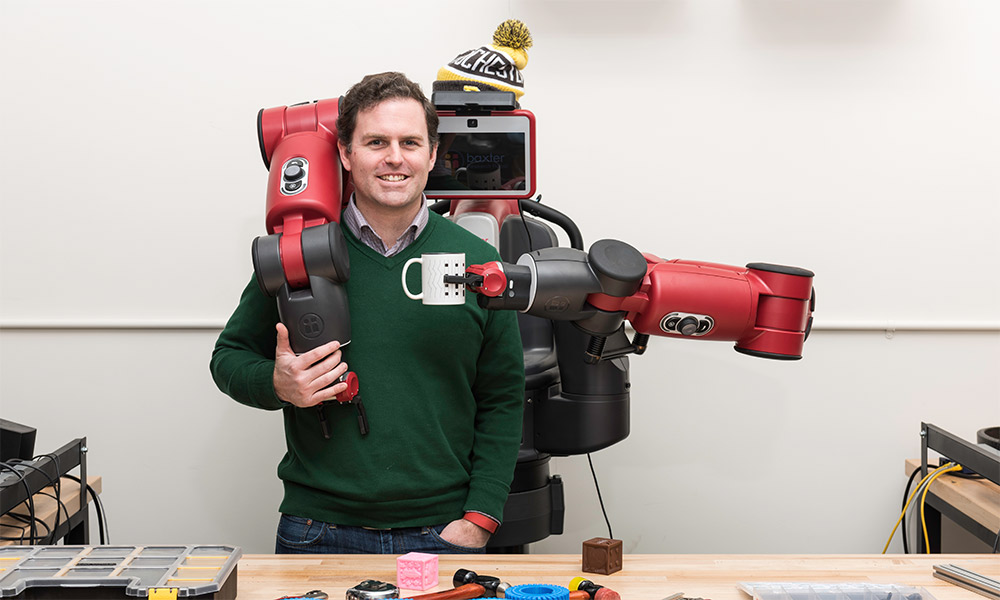

Inside the University of Rochester’s Robotics and Artificial Intelligence Laboratory, a robotic torso looms over a row of plastic gears and blocks, awaiting instructions. Next to it, Jacob Arkin ’13, a doctoral candidate in electrical and computer engineering, gives the robot a command: “Pick up the middle gear in the row of five gears on the right,” he says to the Baxter Research Robot. The robot, sporting a University of Rochester winter cap, pauses before turning, extending its right limb in the direction of the object.

Unlocking big data

A Newscenter series on how Rochester is using data science to change how we research, how we learn, and how we understand our world.

Baxter, along with other robots in the lab, is learning how to perform human tasks and to interact with people as part of a human-robot team. “The central theme through all of these is that we use language and machine learning as a basis for robot decision making,” says Thomas Howard ’04, an assistant professor of electrical and computer engineering and director of the University’s robotics lab.

Machine learning, a subfield of artificial intelligence, started to take off in the 1950s, after the British mathematician Alan Turing published a revolutionary paper about the possibility of devising machines that think and learn. His famous Turing Test proposes that if a person is unable to distinguish a machine from a human being, the machine can be said to have real intelligence.

Today, machine learning provides computers with the ability to learn from labeled examples and observations of data, and to adapt when exposed to new data, instead of having to be explicitly programmed for each task. Researchers are developing computer programs that build models to detect patterns, draw connections, and make predictions from data, and then use those predictions to make informed decisions about what to do next.
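To make the idea of learning from labeled examples concrete, here is a minimal sketch in Python. It is an illustration only, not the method used in any of the Rochester labs: the measurements, the labels, and the `centroids` and `predict` helpers are all hypothetical. The program is never told a rule for what makes a gear or a block; it averages the labeled examples it is given and assigns a new object to the closest average.

```python
from math import dist

# Hypothetical labeled examples: (width_cm, height_cm) paired with a label.
LABELED = [
    ((2.0, 2.0), "gear"),
    ((2.2, 1.9), "gear"),
    ((5.0, 5.1), "block"),
    ((4.8, 5.3), "block"),
]

def centroids(examples):
    """Average the feature vectors of each label to form a simple model."""
    sums = {}
    for features, label in examples:
        total, count = sums.get(label, ([0.0] * len(features), 0))
        sums[label] = ([t + f for t, f in zip(total, features)], count + 1)
    return {label: tuple(t / n for t in total) for label, (total, n) in sums.items()}

def predict(model, features):
    """Return the label whose average example is closest to the new object."""
    return min(model, key=lambda label: dist(model[label], features))

model = centroids(LABELED)
print(predict(model, (2.1, 2.1)))

# Adapting to new data: add examples of a new category and rebuild the model.
updated = centroids(LABELED + [((9.0, 1.0), "rod"), ((8.8, 1.2), "rod")])
print(predict(updated, (9.1, 0.9)))
```

Nothing in the code mentions gears, blocks, or rods by rule; adding a category only requires adding labeled examples, which is the contrast with explicit programming drawn above.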

The results of machine learning are apparent everywhere, from Facebook’s personalization of each member’s News Feed, to speech recognition systems like Siri, e-mail spam filtering, financial market tools, recommendation engines such as those used by Amazon and Netflix, and language translation services.

Howard and other University professors are developing new ways to use machine learning to provide insights into the human mind and to improve the interaction between computers, robots, and people.

With Baxter, Howard, Arkin, and collaborators at MIT developed mathematical models for the robot to understand complex natural language instructions. When Arkin directs Baxter to “pick up the middle gear in the row of five gears on the right,” their models enable the robot to quickly learn the connections between audio, environmental, and video data, and adjust algorithm characteristics to complete the task.

What makes this particularly challenging is that robots need to be able to process instructions in a wide variety of environments and to do so at a speed that makes for natural human-robot dialog. The group’s research on this problem led to a Best Paper Award at the Robotics: Science and Systems 2016 conference.

By improving the accuracy, speed, scalability, and adaptability of such models, Howard envisions a future in which humans and robots perform tasks in manufacturing, agriculture, transportation, exploration, and medicine cooperatively, combining the accuracy and repeatability of robotics with the creativity and cognitive skills of people.

“It is quite difficult to program robots to perform tasks reliably in unstructured and dynamic environments,” Howard says. “It is essential for robots to accumulate experience and learn better ways to perform tasks in the same way that we do, and algorithms for machine learning are critical for this.”

Jake Arkin, PhD student in electrical and computer engineering, demonstrates a natural language model for training a robot to complete a particular task.

Using machine learning to make predictions

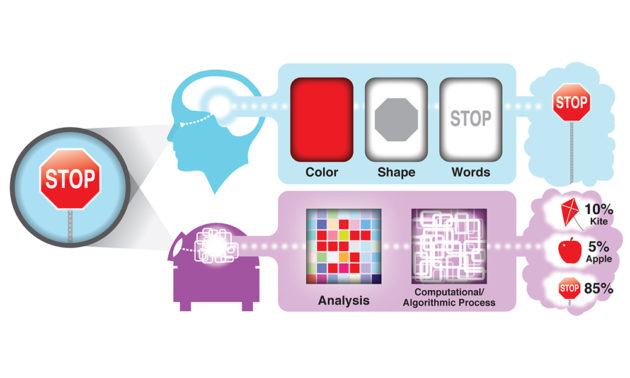

A photograph of a stop sign contains visual patterns and features such as color, shape, and letters that help human beings identify it as a stop sign. To train a computer to identify a person or an object, the computer needs to see these features as unique patterns of data.

“For human beings to recognize another person, we take in their eyes, nose, mouth,” says Jiebo Luo, an associate professor of computer science. “Machines do not necessarily ‘think’ like humans.”

While Howard creates algorithms that allow robots to understand spoken language, Luo employs the power of machine learning to teach computers to identify features and detect configurations in social media images and data.

“When you take a picture with a digital camera or with your phone, you’ll probably see little squares around everyone’s faces,” Luo says. “This is the kind of technology we use to train computers to identify images.”

Using these advanced computer vision tools, Luo and his team train artificial neural networks, a machine learning technique, to enable computers to sort online images and to determine, for instance, emotions in images, underage drinking patterns, and trends in presidential candidates’ Twitter followers.

Artificial neural networks mimic the neural networks in the human brain: they identify images or parse complex abstractions by dividing them into pieces, making connections, and finding patterns. However, machines do not perceive an image as a human being would; the pieces are converted into data patterns and numbers, and the machine learns to identify these through repeated exposure to data.

“Essentially everything we do is machine learning,” Luo says. “You need to teach the machine many times that this is a picture of a man, this is a woman, and it eventually leads it to the correct conclusion.”
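As a rough illustration of an image becoming numbers and a machine improving through repeated exposure, the Python sketch below trains a perceptron, a single artificial neuron and a far simpler relative of the networks Luo’s team uses. Everything in it is an assumption made for illustration: the tiny 3-by-3 “images,” the “top” and “bottom” labels, and the function names.

```python
# Hypothetical 3x3 "images", flattened row by row into nine numbers (1 = dark pixel).
TOP = [
    [1, 1, 1, 0, 0, 0, 0, 0, 0],
    [1, 1, 0, 0, 0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0, 0, 0, 0, 0],
]
BOTTOM = [
    [0, 0, 0, 0, 0, 0, 1, 1, 1],
    [0, 0, 0, 0, 0, 0, 1, 1, 0],
    [0, 0, 0, 0, 0, 0, 0, 1, 1],
]
# Label 1 means "dark pixels in the top row", label 0 means "in the bottom row".
EXAMPLES = [(img, 1) for img in TOP] + [(img, 0) for img in BOTTOM]

def score(weights, bias, pixels):
    """Weighted sum of the pixel values plus a bias term."""
    return sum(w * p for w, p in zip(weights, pixels)) + bias

def train(examples, epochs=10):
    """Show every example repeatedly, nudging the weights after each wrong guess."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for pixels, label in examples:
            guess = 1 if score(weights, bias, pixels) > 0 else 0
            error = label - guess  # 0 when the guess was right
            weights = [w + error * p for w, p in zip(weights, pixels)]
            bias += error
    return weights, bias

def classify(model, pixels):
    """Label an image the model may not have seen during training."""
    weights, bias = model
    return "top" if score(weights, bias, pixels) > 0 else "bottom"

model = train(EXAMPLES)
print(classify(model, [1, 0, 1, 0, 0, 0, 0, 0, 0]))
print(classify(model, [0, 0, 0, 0, 0, 0, 1, 0, 1]))
```

The two images classified at the end are not in the training set. The program never sees a picture, only lists of nine numbers, and what it learns is stored as another list of numbers, the weights.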

Cognitive models and machine learning

If a person sees an object she’s never seen before, she will use her senses to determine various things about the object. She might look at the object, pick it up, and determine it resembles a hammer. She might then use it to pound things.

“So much of human cognition is based on categorization and similarity to things we have already experienced through our senses,” says Robby Jacobs, a professor of brain and cognitive sciences.

While artificial intelligence researchers focus on building systems such as Baxter that interact with their surroundings and solve tasks with human-like intelligence, cognitive scientists use data science and machine learning to study how the human brain takes in data.

“We each have a lifetime of sensory experiences, which is an amazing amount of data,” Jacobs says. “But people are also very good at learning from one or two data items in a way that machines cannot.”

Imagine a child who is just learning the words for various objects. He may point at a table and mistakenly call it a chair, causing his parents to respond, “No that is not a chair,” and point to a chair to identify it as such. As the toddler continues to point to objects, he becomes more aware of the features that place them in distinct categories. Drawing on a series of inferences, he learns to identify a wide variety of objects meant for sitting, each one distinct from others in various ways.

This learning process is much more difficult for a computer. A machine learning system must be exposed to many sets of data in order to keep improving.

One of Jacobs’ projects involves printing novel plastic objects using a 3-D printer and asking people to describe the items visually and haptically (by touch). He uses this data to create computer models that mimic the ways humans categorize and conceptualize the world. Through these computer simulations and models of cognition, Jacobs studies learning, memory, and decision making, specifically how we take in information through our senses to identify or categorize objects.

“This research will allow us to better develop therapies for the blind or deaf or others whose senses are impaired,” Jacobs says.

Machine learning and speech assistants

Many people cite glossophobia—the fear of public speaking—as their greatest fear.

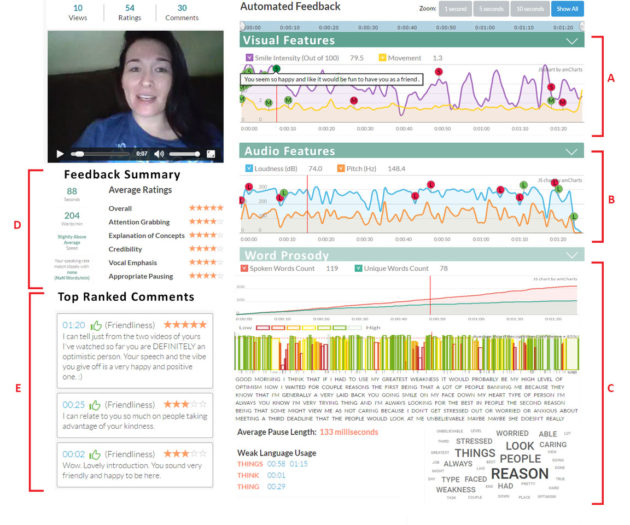

Ehsan Hoque and his colleagues at the University’s Human-Computer Interaction Lab have developed computerized speech assistants to help combat this phobia and improve speaking skills.

When we talk to someone, many of the things we communicate—facial expressions, gestures, eye contact—aren’t registered by our conscious minds. A computer, however, is adept at analyzing this information.

“I want to learn about the social rules of human communication,” says Hoque, an assistant professor of computer science and head of the Human-Computer Interaction Lab. “There is this dance going on when humans communicate: I ask a question; you nod your head and respond. We all do the dance but we don’t always understand how it works.”

In order to better understand this dance, Hoque developed computerized assistants that can sense a speaker’s body language and nuances in presentation and use those to help the speaker improve her communication skills. These systems include ROCSpeak, which analyzes word choice, volume, and body language; Rhema, a “smart glasses” interface that provides live, visual feedback on the speaker’s volume and speaking rate; and, his newest system, LISSA (“Live Interactive Social Skills Assistance”), a virtual character resembling a college-age woman who can see, listen, and respond to users in a conversation. LISSA provides live and post-session feedback about the user’s spoken and nonverbal behavior.

Hoque’s systems differ from Luo’s social media algorithms or Howard’s natural language robot models in that people may use them in their own homes. Users then have the option of sharing the data they receive from the systems for research purposes. This method allows the algorithm to continuously improve, which is the essence of machine learning.

“New data constantly helps the algorithm improve,” Hoque says. “This is of value for both parties because people benefit from the technology and while they’re using it, they’re helping the system get better by providing feedback.”

These systems have a wide range of applications, including helping people to improve small talk, assisting individuals with Asperger syndrome in overcoming social difficulties, helping doctors interact with patients more effectively, improving customer service training, and aiding in public speaking.

Can robots eventually mimic humans?

This is a question that has long lurked in the public imagination. The 2014 movie Ex Machina, for example, portrays a programmer who is invited to administer the Turing Test to a human-like robot named Ava. Similarly, the HBO television series Westworld depicts a Western-themed futuristic theme park populated with artificially intelligent beings that behave and emote like humans.

Although Hoque is able to model human cognition and improve the ways in which machines and humans interact, developing machines that think in the same ways as human beings, or that understand and display the emotional complexity of human beings, is not a goal he aims to achieve.

“I want the computer to be my companion, to help make my job easier and give me feedback,” he says. “But it should know its place.”

“If you have the option, get feedback from a real human. If that is not available, computers are there to help and give you feedback on certain aspects that humans will never be able to get at.”

Hoque cites smile intensity as an example. Through machine learning techniques, computers are able to determine the intensity of various facial expressions, whereas humans are adept at answering the question, ‘How did that smile make me feel?’

“I don’t think we want computers to be there,” Hoque says.